Introduction

This course/book is designed as your 'second course' in programming. It is assumed you've done a first course which taught you the basics of programming in an interpreted language (probably either python or R), and have now had some experience at using these in your research.

You might have felt that your code is holding you back, and that if you could make it faster or use less memory you could take your analysis further. Or you might have got a solution but are unsure whether it's the right approach, or whether you could make it better.

Sometimes seeing the impressive (but sometimes intractable!) code and methods we use for research in bioinformatics can feel intimidating, and I feel there isn't much formal guidance on how to engineer your code this way.

My hope is that this course will give you some extra skills and confidence when developing and improving your research code.

Content

- Optimising python code. We'll start with some ways to make your interpreted code faster, such as arrays, JIT compilation and sparse matrices.

- HPC. How to effectively use and monitor your computation on HPC systems, including compiling code with different options.

- Compiled languages. How using a statically typed language can be faster, how to control memory, and other pros/and cons (using rust as an example). Also understanding how your problem scales, and typical elements of your algorithmic toolbox to solve problems.

- Software engineering. How to turn your research code into a fully fledged software package.

- Recursion and closures. Some new patterns, with more challenging examples to implement.

- Parallel programming. Theory and examples for using multiple CPUs to parallelise code. Examples in multiple languages.

Possible future content

Future modules to be added (possibly):

- CUDA programming for GPU parallelism.

- Foreign function interfaces - linking your compiled code with R and python.

- Machine learning/deep learning.

- Cloud computing.

- Web development.

Resources

Some of this material is based on:

- 'Algorithms for Modern Hardware'. This goes into lots of detail, including a lot of assembly code. This would be good to read after this course, or if you want more evidence and theory behind optimisation.

- The rust book.

- C++ reference guide.

- Rust by practice

Prerequisites

Prerequisites

Before attending, you should be comfortable writing some code in python or R, including basics:

- Conditionals/if statements.

- Iteration.

- Reading from files, writing to files.

- Taking arguments on the command line.

- Use of functions.

- Data types.

- Data frames/arrays (R) or numpy arrays (python). Indexing into them.

It would also be helpful if you were familiar with:

- Plotting in ggplot2 or matplotlib.

- Some experience/use of functional programming, such as

lapplyin R.

Setup

- If you have a Windows computer, please install Ubuntu through WSL

- If you have a Mac, and it has an M1 chip, that you either have access to codon or you have set up conda with intel packages

- Install vscode, and extensions for python.

Session 2

- Make sure you have an account and can submit jobs on codon (at EBI) or a similar HPC environment at your own institution.

Session 3

- Install the rust toolchain, and check you can compile and run the test program.

- Install the rust-analyzer vscode extension. See the guide here https://code.visualstudio.com/docs/languages/rust.

- Check that you can debug a rust test program in vscode.

Session 4

- Set up a github account. You can get a pro account for free with an EBI email.

- Set up ssh key access for your github account.

Optimising python code

Some guiding principles:

- Only optimise code if you need to. By optimising it, will it be able to run on something you couldn't analyse otherwise? Will it save you more computation than the time it takes to optimise? Will lots of other people use the code, and therefore collectively save time?

- In my experience, going after >10x speedups is usually worth it as it hits the first two objectives. Going after ~2x speedups can often be worth it for commonly used programs, or that you intend to distribute as software. A 10% speedup is rarely worth it for research code -- this would be useful in library code used by thousands of people.

- Get the code to work before optimising it. It's helpful to have a test to ensure any changes you make haven't broken anything.

- Do your optimisation empirically, i.e. use a profiler. We're pretty bad at working out by intuition where the slow parts of the code are.

For now, we'll focus on a python example, but the ideas are similar in R.

- Counting codons

- Profiling

- Optimisation strategies

- Memory optimisation

- Writing extensions in compiled code

- Other optimisations

- Notes (for next year)

Counting codons

The first example we will work through is counting codons from a multiple sequence alignment.

A multiple sequence alignment stored in FASTA format might look something like:

>ERR016988;0_0_13

atgaaaaacactatgtctcctaaaaaaatacccatttggcttaccggcctcatcctactg

attgccctaccgcttaccctgccttttttatatgtcgctatgcgttcgtggcaggtcggc

atcaaccgcgccgtcgaactgttgttccgcccgcgtatgtgggatttgctctccaacacc

ttgacgatgatggcgggcgttaccctgatttccattgttttgggcattgcctgctccctt

ttgttccaacgttaccgcttcttcggcaaaaccttttttcagacggcaatcaccctgcct

ttgtgcatccccgcatttgtcagctgtttcacctggatcagcctgaccttccgtgtcgaa

ggcttttgggggacagtgatgattatgagcctgtcctcgttcccgctcgcctacctgccc

gtcgaggcggcactcaaacgcatcagcctgtcttacgaagaagtcagcctgtccttgggc

aaaagccgcctgcaaacctttttttccgccatcctcccccagctcaaacccgccatcggc

agcagcgtgttactgattgccctgcatatgctggtcgaatttggcgcggtatccattttg

aactaccccacttttaccaccgccattttccaagaatacgaaatgtcctacaacaacaat

accgccgccctgctttccgctgttttaatggcggtgtgcggcatcgtcgtatttggagaa

agcatatttcgcggcaaagccaagatttaccacagcggcaaaggcgttgcccgtccttat

cccgtcaaaaccctcaaactgcccggtcagattggcgcgattgtttttttaagcagcttg

ttgactttgggcattattatcccctttggcgtattgatacattggatgatggtcggcact

tccggcacattcgcgctcgtatccgtatttgatgcctttatccgttccttaagcgtatcg

gctttaggtgcgattttgactatattatgtgccttgccccttgtttgggcatcggttcgc

tatcgcaattttttaaccgtttggatagacaggctgccgtttttactgcacgccgtcccc

ggtttggttatcgccctatccttggtttatttcagcatcaactacacccctgccgtttac

caaacctttatcgtcgtcatccttgcctatttcatgctttacctgccgatggcgcaaacc

accctgaggacttccttggaacaactccccaaagggatggaacaggtcggcgcaacattg

gggcgcggacacttctttattttcaggacgttggtactgccgtccatcctgcccggcatt

accgccgcattcgcactcgtcttcctcaaactgatgaaagagctgaccgccaccctgctg

ctgaccaccgacgatgtccacacactctccaccgccgtttgggaatacacatcggacgca

caatacgccgccgccaccccttacgcgctgatgctggtattattttccggcatccccgta

ttcctgctgaagaaatacgccttcaaataa------------------------

>ERR016989;1_112_17

atgaaaaacactatgtctcctaaaaaaatacccatttggcttaccggcctcatcctactg

attgccctaccgcttaccctgccttttttatatgtcgctatgcgttcgtggcaggtcggc

atcaaccgcgccgtcgaactgttgttccgcccgcgtatgtgggatttgctctccaacacc

ttgacgatgatggcgggcgttaccctgatttccattgttttgggcattgcctgcgccctt

ttgttccaacgttaccgcttcttcggcaaaaccttttttcagacggcaatcaccctgcct

ttgtgcatccccgcatttgtcagctgtttcacctggatcagcctgaccttccgtgtcgaa

ggcttttgggggacagtgatgattatgagcctgtcctcgttcccgctcgcctacctgccc

gtcgaggcggcactcaaacgcatcagcctgtcttacgaagaagtcagcctgtccttgggc

aaaagccgcctgcaaacctttttttccgccatcctcccccagctcaaacccgccatcggc

agcagcgtgttactgattgccctgcatatgctggtcgaatttggcgcggtatccattttg

aactaccccacttttaccaccgccattttccaagaatacgaaatgtcctacaacaacaat

accgccgccctgctttccgctgttttaatggcggtgtgcggcatcgtcgtatttggagaa

agcatatttcgcggcaaagccaagatttaccacagcggcaaaggcgttgcccgtccttat

cccgtcaaaaccctcaacctccccggtcagattggcgcgattgtttttttaagcagcttg

ttgactttgggcattattatcccctttggcgtattgatacattggatgatggtcggcact

tccggcacattcgcgctcgtatccgtatttgatgcctttatccgttccttaagcgtatcg

gctttaggtgcggttttgactatattatgtgccttgccccttgtttgggcatcggtccgc

tatcgcaattttttaaccgtttggatagacaggctgccgtttttactgcacgccgtcccc

ggtttggttatcgccctatccttggtttatttcagcatcaactacacccctgccgtttac

caaacctttatcgtcgtcatccttgcctatttcatgctttacctgccgatggcgcaaacc

accctaaggacttccttggaacaactcccaaaagggatggaacaggtcggcgcaacattg

gggcgcggacacttctttattttcaggacgttggtactgccgtccatcctgcccggcatt

accgccgcattcgcactcgtcttcctcaaactgatgaaagagctgaccgccaccctgctg

ctgaccaccgacgatgtccacacactctccaccgccgtttgggaatacacatcggacgca

caatacgccgccgccaccccttacgcgctgatgctggtattattttccggcatccccgta

ttcctgctgaagaaatacgctttcaaataa------------------------

This format has sample headers which start > followed by the sample name, followed

by the sequence, wrapped at 80 characters to make it more human readable. Gaps are given by - characters.

We wish to count, at each aligned position, the number of observed codons for each of the 61 possible triplet positions plus the three stop codons TAG, TAA and TGA.

We'll use an example with aligned porB sequences from the bacterial pathogen Neisseria meningitidis, which you can download here.

Exercise Let's start off by writing some code in to read this file in and doing a simple check that the length is as expected (all the same, and a multiple of three). Let's also add some timing in, to see if we are improving when we add optimisations.

Have a go at this yourself, and then compare with the example below.

Example: read file

import time

import gzip

# Generator which keeps state after yield is called

# Based on a nice example: https://stackoverflow.com/a/7655072

def read_fasta_sample(fp):

name, seq = None, []

for line in fp:

line = line.rstrip()

if line.startswith(">"):

if name: yield (name, ''.join(seq).upper())

name, seq = line[1:], []

else:

seq.append(line)

if name: yield (name, ''.join(seq).upper())

def read_file(file_name):

n_samples = 0

# Open either gzipped or plain file by looking for magic number

# https://stackoverflow.com/a/47080739

with open(file_name, 'rb') as test_f:

zipped = test_f.read(2) == b'\x1f\x8b'

if zipped:

fh = gzip.open(file_name, 'rt')

else:

fh = open(file_name, 'rt')

with fh as fasta:

seqs = list()

names = list()

for h, s in read_fasta_sample(fasta):

if len(s) % 3 != 0:

raise RuntimeError(f"Sequence {h} is not a multiple of three")

elif len(seqs) > 0 and len(s) != len(seqs[0]):

raise RuntimeError(f"Sequence {h} is length {len(s)}, expecting {len(seqs[0])}")

else:

seqs.append(s)

names.append(h)

return seqs, names

def main():

start_t = time.time_ns()

seqs, names = read_file("BIGSdb_024538_1190028856_31182.dna.aln.gz")

end_t = time.time_ns()

time_ms = (end_t - start_t) / 1000000

print(f"Time to read {len(seqs)} samples: {time_ms}ms")

if __name__ == "__main__":

main()

Don't worry about perfecting your code at this point! Let's aim for something that runs easily and works, which we can then improve if we end up using it.

In the example above I have added a timer so we can try and keep track of how long

each section takes to run. A better way to do this would be to average over multiple

runs, for example using the timeit module, but some measurement is better than no

measurement.

I've made sure all the bases are uppercase so we don't have to deal with both

a and A in our counter, but I haven't checked for incorrect bases.

When I run this code, I see the following:

python example1.py

Time to read 4889 samples: 59.502ms

If I decompress the file first:

python example1.py

Time to read 4889 samples: 33.419ms

If you have a hard disk drive rather than a solid state drive, it is often faster to read compressed data and decompress it on the fly, as the speed of data transfer is the rate limiting step.

Exercise Now, using the sequences, write some code to actually count the codons. The output

should be a frequency vector at every site for every codon which does not

contain a -. Do this however you like.

Example: count codons

def count_codons(seqs):

bases = ['A', 'C', 'G', 'T']

codon_len = int(len(seqs[0]) // 3)

counts = [dict()] * codon_len

for seq in seqs:

for codon_idx in range(codon_len):

nuc_idx = codon_idx * 3

codon = seq[nuc_idx:(nuc_idx + 3)]

if all([base in bases for base in codon]):

if codon in counts[codon_idx]:

counts[codon_idx][codon] += 1

else:

counts[codon_idx][codon] = 1

count_vec = list()

for pos in counts:

pos_vec = list()

for base1 in bases:

for base2 in bases:

for base3 in bases:

codon = ''.join(base1 + base2 + base3)

if codon in pos:

pos_vec.append(pos[codon])

else:

pos_vec.append(0)

return count_vec

Aside: debugging

My first attempt (above) was wrong and was returning an empty list. One way to look into this is by using a debugger.

Modern IDEs such as vscode and pycharm also let you run the debugger interactively, which is typically nicer than via the command line. However old school debugging is handy when you have to run your code on HPC systems, or with more complex projects such as when you have C++ and python code interacting.

python has pdb built-in, you can run it as follows:

python -m pdb example1.py

(gdb and lldb are equivalent debuggers for compiled code.)

Run help to see the commands. Most useful are setting breakpoints with b;

continuing execution with c, r, s and n; printing variables with p.

By stopping my code on the line with codon = ''.join(base1 + base2 + base3)

by typing b 62 to add a breakpoint, then r to run the code, I

can have a look at some counts:

p pos

{'ATG': 15401, 'TGA': 47348, 'GAA': 39489, 'AAA': 85118, 'AAT': 30105, 'ATC': 36135, 'TCC': 24440, 'CCC': 22958, 'CCT': 36610, 'CTG': 41756, 'GAT': 10002, 'ATT': 20458, 'TTG': 49731, 'TGC': 21500, 'GCC': 40734, 'GAC': 20218, 'ACT': 19778, 'CTT': 28321, 'TTT': 20797, 'TGG': 29906, 'GGC': 67370, 'GCA': 45599, 'CAG': 23779, 'AGC': 34794, 'TTC': 29469, 'TGT': 9964, 'GTT': 39620, 'CAA': 42858, 'GCT': 30373, 'ACG': 15084, 'CGT': 23916, 'TTA': 9198, 'TAC': 21812, 'ACC': 30370, 'GTA': 33903, 'CGG': 62505, 'CAC': 11408, 'CCA': 20119, 'CAT': 22822, 'TCA': 20719, 'AAG': 42061, 'CCG': 35604, 'GCG': 24592, 'TAG': 10002, 'AGA': 17961, 'AAC': 28534, 'CGC': 21035, 'CTC': 19077, 'TCT': 13737, 'GAG': 4230, 'ACA': 15865, 'GGA': 5578, 'GGT': 42665, 'GTC': 11842, 'AGG': 21378, 'GTG': 1830, 'TCG': 37628, 'GGG': 13214, 'TAA': 18253, 'CTA': 9810, 'TAT': 6398, 'ATA': 2220, 'CGA': 5452, 'AGT': 5939}

For starters, these seem far too high, as we wouldn't expect counts over the number of samples (4889).

Stopping at codon = seq[codon_idx:(codon_idx + 3)] I see:

(Pdb) p codon

'ATG'

This looks ok. I'll step through some of the next lines with n:

if codon in counts[codon_idx]:

(Pdb) p counts

[{}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}

, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {

}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {},

{}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {},

{}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}

, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {

}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {},

{}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {},

{}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}, {}

, {}, {}, {}, {}, {}, {}, {}, {}, {}]

Initialisation looks ok

> example1.py(51)count_codons()

-> counts[codon_idx][codon] = 1

(Pdb) n

> example1.py(45)count_codons()

-> for codon_idx in range(codon_len):

(Pdb) p counts

[{'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG'

: 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {

'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG':

1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'A

TG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}

, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG

': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1},

{'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG':

1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'

ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1

}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'AT

G': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1},

{'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG'

: 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {

'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG':

1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'A

TG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}

, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG

': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1},

{'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG':

1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'

ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1

}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'AT

G': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1},

{'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG'

: 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {

'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG':

1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}, {'ATG': 1}]

Ah, here's one problem. My initialisation of counts looked like counts = [dict()] * codon_len. What this

has actually done is initialised a single dictionary and copied a reference to it into every item of the list,

so when I update one reference only one underlying dictionary is operated on.

NB: we'll cover copying versus references in more detail later, but for now you can think of most python variables as a 'label' which points to a bit of memory containing the data. If you would like more information see this tutorial and the copy module.

I can fix this by using a list

comprehension counts = [dict() for x in range(codon_len)] instead.

p pos

{'ATG': 4889}

Looks good.

Stepping through further it looks like I have forgotten to append each codon's vector to the overall return. So the fixed function looks like this:

Fixed codon count

def count_codons(seqs):

codon_len = int(len(seqs[0]) // 3)

counts = [dict() for x in range(codon_len)]

for seq in seqs:

for codon_idx in range(codon_len):

codon = seq[codon_idx:(codon_idx + 3)]

if not '-' in codon:

if codon in counts[codon_idx]:

counts[codon_idx][codon] += 1

else:

counts[codon_idx][codon] = 1

bases = ['A', 'C', 'G', 'T']

count_vec = list()

for pos in counts:

pos_vec = list()

for base1 in bases:

for base2 in bases:

for base3 in bases:

codon = ''.join(base1 + base2 + base3)

if codon in pos:

pos_vec.append(pos[codon])

else:

pos_vec.append(0)

count_vec.append(pos_vec)

return count_vec

This still takes around 300ms and gives what looks like a plausible result at first glance.

Exercise. Plot your results, and perhaps also plot a summary like the codon diversity at each site (one simple way is with entropy but ecological indices are typically better for count data).

You could use matplotlib or plotly for plotting,

or read the output into R and use {ggplot2}.

Now that we have this baseline which are confident in, let's add a very simple test so when we add other functions we can check it's still working:

assert(codons_new = codons_correct)

A better approach would be to use a smaller dataset which we can write the result out for, and then add in more test cases. More on this in the software engineering module, but for now this is a sensible basic check.

Profiling

Is 300ms fast or slow? Should we optimise this? We'll go into this more in the complexity section, but we can probably guess that the code will take longer with both more sequences \(N\) and more codon positions \(M\), likely linearly.

In the above code, the file reading needs to read and process \(NM\) bases,

the first loop in count_codons() is over \(N\) sequences then \(M\) codon

positions with a constant update inside this, the second loop is over \(M\) positions

and 64 codons. So it would appear we can expect the scale of \(NM\) to determine

our computation.

So if we had 10000 genes, each around 1kb to do this with, and one million samples we'd expect it to take around \(10^4 \times \frac{10^3 \times 10^6}{4889 * 403}\) longer, so around 150 seconds, still pretty fast.

Using the principles above, even in this expanded case this probably isn't a good optimisation target. But, for the purposes of this exercise, let's assume we were working with reams and reams of sequence continuously, so that it was worth it. How should we start?

Well, the timings we have put in are already helpful. It looks like we're spending about 15% of the total time reading sequence in, and the remaining 85% counting the codons. So the latter part is probably a better target. This is a top-down approach to profiling and optimisation.

An alternative approach is to find 'hot' functions -- lower level parts of the code which are frequently called by many functions, and together represent a large amount of the execution time. This is a bottom-up approach.

Using a profiler can help you get a much better sense of what to focus on when you want to make your code faster. For compiled code:

- On OS X you can use 'Instruments'

- the Intel OneAPI has 'vtune' which is now free of charge.

- Rust has

flamegraphwhich is very easy to use. - The classic choice is

gprof, but it is more limited than these options.

Options for python don't usually manage to drill down to quite the same level. But

it's simple enough to get started with cProfile as follows:

python -m cProfile -o cprof_results example1.py

See the guide on how to use the

pstats module to view the results.

Another choice is line_profiler, which

works using @profile decorators.

Exercise. Profile your code with one of these tools. What takes the most time? Where would you focus your optimisation?

Optimisation strategies

The rest of this chapter consists of some general strategies for speeding your code up, once you have determined the slow parts. These aren't exhaustive.

Use a better algorithm or data structure

If you can find a way to do this, you can sometimes achieve orders of magnitude speedups. There are some common methods you can use, some of which you'll already be very familiar with. Often this is a research problem in its own right.

Look at the following code which counts codons. Although this looks like fairly reasonable code, it is about 50x slower than the approach above.

def count_codons_list(seqs):

codon_len = int(len(seqs[0]) // 3)

counts = list()

# For each sequence

for seq in seqs:

# For each codon in each sequence

for codon_idx in range(codon_len):

# Extract the codon and check it is valid

codon = seq[codon_idx:(codon_idx + 3)]

if not '-' in codon:

# Name codons by their position and sequence

codon_name = f"{codon_idx}:{codon}"

# If this codon has already been seen, increment its count,

# otherwise add it with a count of 1

found = False

for idx, entry in enumerate(counts):

name, count = entry

if name == codon_name:

counts[idx] = (name, count + 1)

found = True

break

if not found:

counts.append((codon_name, 1))

# Iterate through all the possible codons and find their final counts

bases = ['A', 'C', 'G', 'T']

count_vec = list()

for pos in range(codon_len):

pos_vec = list()

for base1 in bases:

for base2 in bases:

for base3 in bases:

codon_name = f"{pos}:{base1}{base2}{base3}"

found = False

for idx, entry in enumerate(counts):

name, count = entry

if name == codon_name:

pos_vec.append(count)

found = True

break

if not found:

pos_vec.append(0)

count_vec.append(pos_vec)

return count_vec

Even for this example optimisation becomes worth it. The code now takes 13s to run, so on the larger dataset it would take at least two hours. (But actually the scaling here is quadratic in samples and positions, so it would be more like 33 years! Now it's really worth improving).

Exercise Why is it slow? How would you improve it by using a different python data structure. Think about using profiling to guide you, and an assert to check your results match

Avoiding recomputing results

If you can identify regions where you are repeatedly performing the same computation,

you can move these out of loops and calculate them just once at the start. In compiled

languages, it is sometimes possible to generate constant values at compile time so

they just live in the program's code and they are never computed at run time (e.g.

with constexpr or by writing out values by hand).

In the above code, we could avoid regenerating the codon keys:

bases = ['A', 'C', 'G', 'T']

codons = list()

for base1 in bases:

for base2 in bases:

for base3 in bases:

codon = ''.join(base1 + base2 + base3)

codons.append(codon)

for pos in counts:

pos_vec = list()

for codon in codons:

if codon in pos:

pos_vec.append(pos[codon])

else:

pos_vec.append(0)

count_vec.append(pos_vec)

Using timeit to benchmark this against the function above (excluding set-up

and the filling of counts which stays the same), run 1000 times:

fn1: 5.23s

fn2: 1.98s

A ~2.5x speedup, not bad.

We could also just write out a codon array by hand in the code. Although in this case, it's probably less error-prone to generate it using the nested for loops, the time taken to generate it is minimal. A case where this is useful are precomputing tails of integrals, which are commonly used in random number generation.

Using arrays and preallocation

In python, numpy is an incredibly useful package for many types of scientific data,

and is very highly optimised. The default is to store real numbers (64-bit floating point,

but this can be changed by explicitly settting the dtype.) In particular, large 2D arrays are almost always best in this format.

These also work well with pandas to add metadata annotations to the data, scipy and numpy.linalg

functions. Higher dimensions can also be used.

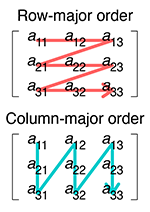

Comparing an array to a list of lists, data is written into one dimensional memory as follows:

matrix:

0.2 0.4 0.5

0.3 0.1 0.2

0.1 0.9 1.2

memory (row-major/C-style):

0.2 0.4 0.5 0.3 0.1 0.2 0.1 0.9 1.2

memory (col-major/F-style):

0.2 0.3 0.1 0.4 0.1 0.9 0.5 0.2 1.2

list of lists:

address1 0.2 0.4 0.5

...

address2 0.3 0.1 0.2

...

address3 0.1 0.9 1.2

Row and column major order by Cmglee, CC BY-SA 4.0

The list of lists may have the rows separated in memory, they are written wherever

there is space. The arrays in numpy are guaranteed to be contiguous. The data

can be written across rows (row-major), or down the columns (col-major). To access

a value, internally numpy's code looks something like this:

val = arr[i * i_stride + j * j_stride];

For row-major data, i_stride = 1 and j_stride = n_columns. For col-major data

i_stride = n_rows and j_stride = 1. This can be extended into N dimensions

by adding further strides.

NB In R you can use the built-in array type, with any vector of dimensions dim. The indexing

is really intuitive and gives fast code. It's one of my favourite features of the

language. Note there is also a matrix type, which uses exactly two dimensions and silently

converts all entries to the same type -- use with care (particularly with apply, vapply is

often safer).

Why are numpy arrays faster?

- No type checking, as you're effectively using C code.

- Less pointer chasing as memory is contiguous (see compiled languages).

- Optimisations such as vectorised instructions are possible for the same reason.

- Functions to work with the data have been optimised for you (particularly linear algebra). And in some cases preallocation of the memory is faster than dynamically sized lists (though in python this makes less of a difference as lists are pretty efficient).

Simple matrix multiplication order in memory by Maxiantor, CC BY-SA 4.0

Let's compare summing values in a list of lists to summing a numpy array:

import numpy as np

def setup():

xlen = 1000

ylen = 1000

rand_mat = np.random.rand(xlen, ylen)

lol = list()

for x in range(xlen):

sublist = list()

for y in range(ylen):

sublist.append(rand_mat[x, y])

lol.append(sublist)

return rand_mat, lol

def sum_mat(mat):

return np.sum(mat)

def sum_lol(lol):

full_sum = 0

for x in range(len(lol)):

for y in range(len(lol[x])):

full_sum += lol[x][y]

return full_sum

def main():

import timeit

rand_mat, lol = setup()

print(sum_mat(rand_mat))

print(sum_lol(lol))

setup_str = '''

import numpy as np

from __main__ import setup

from __main__ import sum_mat

from __main__ import sum_lol

rand_mat, lol = setup()

'''

print(timeit.timeit("sum_mat(rand_mat)", setup=setup_str, number=100))

print(timeit.timeit("sum_lol(lol)", setup=setup_str, number=100))

if __name__ == "__main__":

main()

Which gives:

numpy sum: 500206.0241503447

list sum: 500206.0241503486

100x numpy sum time: 0.019256124971434474

100x list sum time: 9.559518916998059

A 500x speedup! Also note the results aren't identical, the implementations of sum cause slightly different rounding errors.

We can do a bit better using fsum:

def sum2_lol(lol):

from math import fsum

row_sum = [fsum(x) for x in lol]

full_sum = fsum(row_sum)

return full_sum

This takes about 2s (5x faster than the original, 100x slower than numpy) and gives a more precise result.

There are also advantages to preallocating memory space too, i.e. giving the size of the data first, then writing into the structure using appropriate indices. With the codon counting example, that might look like this:

def count_codons_numpy(seqs):

# Precalculate codons and map them to integers

bases = ['A', 'C', 'G', 'T']

codon_map = dict()

idx = 0

for base1 in bases:

for base2 in bases:

for base3 in bases:

codon = ''.join(base1 + base2 + base3)

codon_map[codon] = idx

idx += 1

# Create a count matrix of zeros, and write counts directly into

# the correct entry

codon_len = int(len(seqs[0]) // 3)

counts = np.zeros((codon_len, len(codon_map)), np.int32)

for seq in seqs:

for codon_idx in range(codon_len):

codon = seq[codon_idx:(codon_idx + 3)]

if not '-' in codon:

counts[codon_idx, codon_map[codon]] += 1

return counts

This is actually a bit slower than the above code as the codon_map means

accesses move around in memory rather than being to adjacent locations (probably

causing cache misses, but I haven't checked this.)

Sparse arrays

When you have numpy arrays with lots of zeros, as we do here, it can

be advantageous to use a sparse array. The basic idea is that rather than storing

all the zeros in memory, where we do have a non zero value (e.g. a codon count)

we store the value, its row and column position in the matrix. As well as saving

memory, some computations are much faster with sparse rather than dense arrays. They

are frequently used in machine learning applications. For example see lasso regression which can use a sparse array

as input, and jax for

use in deep learning.

You can convert a dense array to a sparse array:

sparse_counts = scipy.sparse.csc_array(counts)

print(sparse_counts)

(3, 0) 4889

(4, 0) 4889

(5, 0) 4889

(6, 0) 4889

(81, 0) 4889

(82, 0) 613

(94, 0) 3281

...

In python you can use scipy.sparse.coo_array to build a sparse array, then convert it into csr or csc form

to use in computation.

In R you can use dgTMatrix,

and convert to dgCMatrix for computation. The glmnet package works well with this.

Just-in-time compilation

We mentioned above one of the reasons python code is not as fast as the numpy equivalent is due to the type checking that is needed when it is run. One way around this is with 'just-in-time compilation', where python bytecode is compiled to (fast) machine code at runtime and before a function executes, and checking of types is done once when the function is first compiled.

One package that can do this fairly easily is numba.

numba works well with simple numerical code like the sum above, but less well

with string processing, data frames or other packages. It can even run some code

on CUDA GPUs (I haven't tried it -- a quick look suggests it is somewhat fiddly but actually

quite feature-complete).

In many cases, you can run numba by adding an @njit decorator to your function.

NB I would always recommend using nopython=true or equivalently @njit as you otherwise you won't see when numba is failing to compile.

Exercise: Try running the sum_lol() and sum_lol2() functions using numba.

Time them. Run them twice and time each run. Note that fsum won't work, but you

can use sum. You will also want to use List from numba.

Expand the section below when you have tried this.

Sums with numba

import numpy as np

from numba import njit

from numba.typed import List

def setup():

xlen = 1000

ylen = 1000

rand_mat = np.random.rand(xlen, ylen)

lol = List()

for x in range(xlen):

sublist = List()

for y in range(ylen):

sublist.append(rand_mat[x, y])

lol.append(sublist)

return rand_mat, lol

@njit

def sum_mat(mat):

return np.sum(mat)

@njit

def sum_lol(lol):

full_sum = 0

for x in range(len(lol)):

for y in range(len(lol[x])):

full_sum += lol[x][y]

return full_sum

@njit

def sum2_lol(lol):

row_sum = [sum(x) for x in lol]

full_sum = sum(row_sum)

return full_sum

def main():

import timeit

rand_mat, lol = setup()

print(sum_mat(rand_mat))

print(sum_lol(lol))

setup_str = '''

import numpy as np

from __main__ import setup

from __main__ import sum_mat, sum_lol, sum2_lol

rand_mat, lol = setup()

# Compile the functions here

run1 = sum_mat(rand_mat)

run2 = sum_lol(lol)

run3 = sum2_lol(lol)

'''

print(timeit.timeit("sum_mat(rand_mat)", setup=setup_str, number=100))

print(timeit.timeit("sum_lol(lol)", setup=setup_str, number=100))

print(timeit.timeit("sum2_lol(lol)", setup=setup_str, number=100))

if __name__ == "__main__":

main()

This now gives the same result between both implementations:

500032.02596789633

500032.02596789633

and the numba variant using the built-in sum is a similar speed to numpy (time in s):

sum_mat 0.09395454201148823

sum_lol 2.7725684169563465

sum2_lol 0.08274500002153218

We can also try making our own array class with strides rather than using lists of lists:

@njit

def sum_row_ar(custom_array, x_size, x_stride, y_size, y_stride):

sum = 0.0

for x in range(x_size):

for y in range(y_size):

sum += custom_array[x * x_stride + y * y_stride]

return sum

This about twice as fast as the list of list sum (1.4s), but still a lot slower than the built-in sums which can benefit from other optimisations.

Working with characters and strings

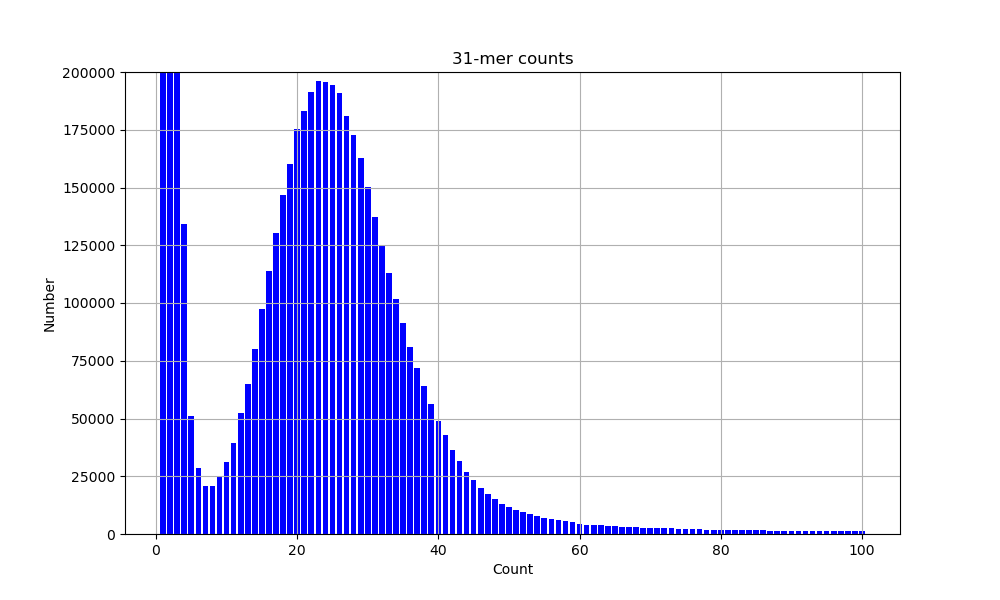

Needing arrays of characters (aka strings) is very common in sequence analysis, particularly with genomic data. It's very easy to work with strings in python (less so in R), but not always particularly fast. If you need to optimise string processing ultimately you may end up having to use a compiled language -- we will work through an example counting substrings (k-mers) from sequence reads in a later chapter.

That being said, numpy does have some support for working with strings by treating

them as arrays of characters. Characters are usually

coded as ASCII, which

uses a single byte (8 bits: np.int8/u8/char/uint8_t depending on language).

Note that unicode, which is crucially needed for encoding emojis 🕴️ 🤯 🐈⬛ 🦉 and therefore essential to any modern research code, is a little more complex as it is variable length and uses an terminating character.

We can read our string directly into a numpy array of bytes as follows:

s = np.frombuffer(s.lower().encode(), dtype=np.int8)

Genome data often has upper and lowercase bases (a or A, c or C and so on) with no particular meaning assigned to this difference. It's useful to convert everything to a single case for consistency.

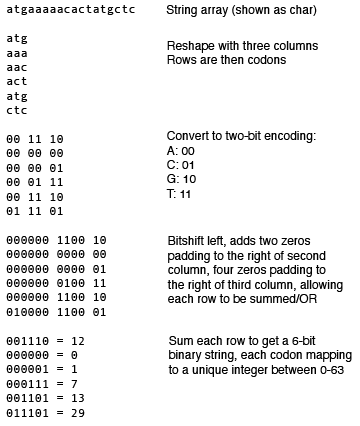

Here is an example converting the bases {a, c, g, t} into {0, 1, 2, 3}/{b00, b01, b10, b11}

and using some bitshifts to do the codon counting:

@njit

def inc_count(codon_map, X):

for idx, count in enumerate(codon_map):

X[count, idx] += 1

def count_codons(seqs, n_codons):

n_samples = 0

X = np.zeros((65, n_codons), dtype=np.int32)

for s in seqs:

n_samples += 1

s = np.frombuffer(s.lower().encode(), dtype=np.int8).copy()

# Set ambiguous bases to a 'bin' index

ambig = (s!=97) & (s!=99) & (s!=103) & (s!=116)

if ambig.any():

s[ambig] = 64

# Shape into rows of codons

codon_s = s.reshape(-1, 3).copy()

# Convert to usual binary encoding

codon_s[codon_s==97] = 0

codon_s[codon_s==99] = 1

codon_s[codon_s==103] = 2

codon_s[codon_s==116] = 3

# Bit shift converts these numbers to a codon index

codon_s[:, 1] = np.left_shift(codon_s[:, 1], 2)

codon_s[:, 2] = np.left_shift(codon_s[:, 2], 4)

codon_map = np.fmin(np.sum(codon_s, 1), 64)

# Count the codons in a loop

inc_count(codon_map, X)

# Cut off ambiguous

X = X[0:64, :]

return X, n_samples

Overview of the algorithm

Overview of the algorithm

This also checks for any non-acgt bases and puts them in an imaginary 65th codon,

which acts as a bin for any unobserved data. Stops can also be removed. A numba

loop is used to speed up the final count.

Overall this is about 50% faster than the dictonary implementation for this size of data, but is quite a bit more complex and likely error-prone. This type of processing can become worth it when the strings are larger, as we will see.

Alternative python implementations

We can also try alternative implementations of the python interpreter over the

standard CPython. A popular one is pypy which can

be around 10x faster for no extra effort. You can install via conda.

On my system for the sum benchmake, this ended up being 3-10x slower than CPython:

sum_lol 36.7518346660072

sum2_lol 56.79283958399901

but for the codon counting benchmark, was about twice as fast:

fn1: 2.63s

fn2: 0.83s

It costs almost nothing to try in many cases, but make sure you take some measurements.

Memory optimisation

It's often the case that you want to reduce memory use rather than increase speed. Similar tools can be used for memory profiling, however in python this is more difficult. If memory is an issue, using a compiled language where you have much more control over allocation and deallocation, moving and copying usually makes sense.

In python, using preallocated arrays can help you control and predict memory, and ensuring you don't copy large objects or delete them after use is also a good start.

Some other tips:

- Avoid reading everything into memory and compute 'on the fly'. The above code reads in all of the sequences first, then processes them one at a time. These could be combined, so codons counts are updated after each sequence is read in, so then only one sequence needs to fit in memory.

- You can use disk (i.e. files) to move objects in and out of memory, at the cost of time.

- Memory mapping large files you wish to modify part of can be an effective approach.

Writing extensions in compiled code

It is possible to write C/C++ code and call it from python using Cython. Instead,

I would highly recommend either the pybind11

or nanobind packages which make this a lot easier.

The tricky part is writing your setup.py and Makefile/CMakeLists.txt to get

everything to work together. If you are interested in this approach, examples from

some of our group's packages may be helpful:

sketchlibcalls C++ and CUDA code from python.mandrakedoes the same, but also returns an object used in the python code.ggcalleruses both python and C++ code throughout.

Please feel free to adapt and reuse the build approach taken in any of these.

Other optimisations

For data science, have a look at the following packages for tabular data:

cuDF- GPU accelerated data frames: https://docs.rapids.ai/api/cudf/stable/- Apache arrow and Parquet format: https://arrow.apache.org/docs/python/index.html

Notes (for next year)

- Code should probably be given, rather than written

- This isn't doable for R users

- 1st part unclear what should be read/checked

- Which reading frames

- Snakeviz a good tool for visualisation of profiles

- Better examples of fasta and expected output

- Bitshift example is the wrong way round

HPC

In this session we will look at running code on compute clusters.

An initial consideration is always going to be 'when should I run code on the cluster rather than my laptop?'. I think the following are the main reasons:

- Not enough resources on your machine (e.g. not enough memory, needs a high-end GPU).

- Job will take longer than you want to leave you machine on for.

- Running many jobs in parallel.

- Using data which is more easily/only accessed through cluster storage.

- A software installation which works more easily on cluster OS (e.g. linux rather than OS X).

- Benchmarking code.

Of course it's almost always easier to run code on your local machine if you can avoid these cases. Weighing up effort to run on the HPC versus the effort of finding workarounds to run locally is hard. Making the HPC as painless as possible, while acknowledging there will always be some pain, is probably the best approach.

Particular pain-points of using the HPC can be:

- Clunky interface, terminal only.

- Waiting for your jobs to run.

- Running jobs interactively.

- Installing software.

- Troubleshooting jobs or getting code to run.

- Running many jobs at once and resubmitting failed jobs.

- Making your workflow reproducible.

I've tried to present solutions to these in turn, including three exercises:

- Estimating resource use.

- Installing software without admin rights.

- Running a job array.

Some of this will be specific to the current setup of the EBI codon cluster. I'm sticking with LSF for now -- a change to SLURM is planned because apparently it has better metrics centrally. Similar principles apply as with LSF, some features do change. For this year cloud computing won't be covered (sorry), but I can try and add this in future. So the topics for today:

- Maximising your quality of life on the HPC

- LSF basics (recap)

- Getting jobs to run and requesting resources

- Interactive jobs

- Installing software without admin rights

- Troubleshooting jobs and prototyping code

- Job arrays

- Reproducibility on the HPC

Maximising your quality of life on the HPC

There are some basic tools you can use for bash/zsh and Unix which will make your time on the HPC less unpleasant. I'll mention them here -- if they look useful try them out after the class.

If anyone has further suggestions, now is a good time to discuss them.

tmux

This is my biggest piece of advice in this section: always use tmux to maintain

your session between log ins. (You may have used screen before, tmux has more

features and is universally available).

The basic usage is:

- When you log in for the first time, type

tmux new(ortmux new zshif you wanted to run a specific terminal). - This is your session, run commands as normal within the interface.

- When it's time to log out, press

Ctrl-bthend(for detach). - Next time you log in, type

tmux attachto return to where you were. - Use

Ctrl-bthencto make a new tab.Ctrl-band a number or arrow to switch between. - Use

Ctrl-bthen[then arrows to scroll (but easier to make this the mouse as normal through config file).

If you crash/lose connection the session will still be retained. Sessions are lost

when the node reboots. Also note, you need to specify a specific node e.g. codon-login-01

rather than the load-balancer codon-login when using tmux.

There are many more features available if you edit the ~/.tmux.conf file. In particular

it's useful to turn the mouse on so you can click between panes and scroll as normal.

Here's an old one of mine with more

examples.

Background processes

Less useful on a cluster, but can be if you ever use a high-performance machine without a queuing system (e.g. openstack, cloud).

Ctrl-z suspends a process and takes you back to the terminal. fg to foreground (run

interactively), bg to keep running but keep you at the prompt. See jobs to look

at your processes.

You can add an ampersand & at the end of a command and it will run in the background

without needing to suspend first. nohup will keep running even when you log out

(not necessary if you are using tmux).

Text editors

Pick one of vi/vim/gvim/neovim or emacs and learn some of the basic commands.

Copying and pasting text, find and replace, vertical editing and repeating custom commands

are all really useful to know. Once you're used to them, the extensions for VSCode which

let you use their commands are very powerful.

Although, in my opinion, they don't replace a modern IDE such as VSCode every system has them installed and it's very useful to be able to make small edits to your code or other files. Graphical versions are also available.

Terminal emulators

Impress your friends and make your enemies jealous by using a non-default terminal emulator. Good ones:

zsh and oh-my-zsh

I personally prefer zsh over bash because:

- History is better. Type the start of a command, then press up and you'll get the commands you've previously used that started like that. For example, type

lsthen press up. - The command search

Ctrl-Ris also better. - Keep pressing tab and you'll see options for autocomplete.

I'm sure there are many more things possible.

zsh is now the default on OS X. You can change shell with chsh, but you usually

need admin rights. On the cluster, you can just run zsh to start a new session,

but if you're using tmux you'll pop right back into the session anyway.

You can also install oh-my-zsh which has some nice extensions, particularly for within git directories. The little arrow shows you whether the last command exited with success or not.

Enter-Enter-Tilde-Dot

This command exits a broken ssh session. See here for more ssh commands.

Other handy tools I like

agrather thangrep.fdrather thanfind.fzffor searching through commands. Can be integrated withzsh.

While I'm writing these out, can't resist noting one of my favourite OS X commands:

xattr -d "com.apple.quarantine" /Applications/Visual\ Studio\ Code.app

Which gets rid of the stupid lock by default on most executables on OS X.

LSF basics (recap)

- Submit jobs with

bsub. - Check your jobs with

bjobs.bjobs -dto include recently finished jobs. - View the queues with

bqueues. Change jobs withbswitch. bstop,bresume,brestartto manage running jobs. End jobs withbkill.bhostsandlshoststo see worker nodes.bmodto change a job.

Most of these you can add -l to get much more information. bqueues -l is interesting

as it shows your current fair-share priority (lower if you've just run a lot of jobs).

There are more, but these are the main ones. Checkpointing doesn't work well, as far as I know.

Getting jobs to run and requesting resources

It's frustrating when your jobs take a long time to run. Requesting the right amount of resources both helps your job find a slot in the cluster to run, and lowers the load overall helping everyone's jobs to run.

That being said, I don't want to put anyone off experimenting or running code on the HPC. Don't worry about trying things out or if you get resource use wrong. Over time improving your accuracy is worthwhile, but it's always difficult with a new task -- very common in research! We now have some nice diagnostics and history here I'd recommend checking out periodically to keep track of how you're doing: http://hoxa.windows.ebi.ac.uk:8080/.

The basic resources for each CPU job are:

- CPU time (walltime)

- Number of CPU cores

- Memory

/tmpspace

Though the last of these isn't usually a concern, we have lots of fast scratch space you can point to if needed. Disk quotas are managed outside of LSF and I won't discuss them here.

When choosing how much of these resources to request, you want to avoid:

- The job failing due to running out of resources.

- Using far less than the requested resources.

- Long running jobs.

- Inefficient resource use (e.g. partial parallelisation, peaky maximum memory).

- Requesting resources which are hard to obtain (many cores, long run times, lots of memory, lots of GPUs).

- Lots of small jobs.

- Inefficient code.

Typically 1) is worse than 2). Those resources were totally wasted rather than partially wasted. We will look at this in the exercise.

Long running jobs are bad because failure comes at a higher cost, and it makes the cluster use less able to respond to dynamic demand. Considering adding parallelisation rather than a long serial job if possible, or adding some sort of saving of state so jobs can be resumed.

You can't usually do much about the root causes of 4) unless you wrote the code yourself. You will always need a memory request which is at least the maximum demand (you can have resource use which changes over the job, but it's not worth it unless e.g. you are planning the analysis of a biobank). Inefficient parallel code on a cluster may be better as a serial job. Note that if you don't care about the code finishing quickly it's always more efficient in CPU terms to run them on a single core. For example, if you have 1000 samples which you wished to run genome assembly on would you run:

- 1000 single-threaded jobs

- 1000 jobs with four threads

- 1000 jobs with 32 threads?

(we will discuss this)

-

can sometimes be approached by using job arrays or trading off your requests. Sometimes you're just stuffed.

-

is bad because of the overhead of scheduling them. Either write a wrapper script to run them in a loop, or use the

shortqueue. -

at some point it's going to be worth turning your quickly written but slow code into something more efficient, as per the previous session. Another important effect can come from using the right compiler optimisations, which we will discuss next week.

Anatomy of a bsub

Before the exercise, let's recap a typical bsub command:

bsub -R "select[mem>10000] rusage[mem=10000]" -M10000 \

-n8 -R "span[hosts=1]" \

-o refine.%J.o -e refine.%J.e \

python refine.py

-R "select[mem>10000] rusage[mem=10000]" -M10000requests ~10Gb memory.-n8 -R "span[hosts=1]"requests 8 cores on a single worker node.-o refine.%J.o -e refine.%J.ewrites STDOUT and STDERR to files named with the job number.python refine.pyis the command to run.

NB the standard memory request if you don't specify on codon is 15Gb. This is a lot, and you should typically reduce it.

If you want to capture STDOUT without the LSF header add quotes:

bsub -o refine.%J.o -e refine.%J.e "python refine.py > refine.stdout"

You can also make execution dependent on completion of other jobs with the -w flag

e.g. bsub -w DONE(25636). This can be useful in pipeline code.

When I first started using LSF I found a few commands helpful for looking through your jobs:

bjobs -a -x

find . -name "\*.o" | xargs grep -L "Successfully completed"

find . -name "\*.o" | xargs grep -l "MEMLIMIT"

find . -name "\*.e" | xargs cat

Exercise 1: Estimating resource use

You submit your job, frantically type bjobs a few times until you see it start

running, then go off and complete the intermediate programming class feedback form while you wait. When you check

again, no results and a job which failed due to running out of memory, or going over run time. What now?

You can alter your three basic resources as follows:

- CPU time: ask for more by submitting to a different queue.

- CPU time: use less wall time by asking for more cores and running more threads.

- Memory: ask for more in the

bsubcommand.

Ok, but how much more? There are three basic strategies you can use:

- Double the resource request each time.

- Run on a smaller dataset first, then estimate how much would be needed based on linear or empirical scaling.

- Analyse the computational efficiency analytically (or use someone else's analysis/calculator).

The first is a lazy but effective approach for exploring a scale you don't know the maximum of. Maybe try this first, but if after a couple of doublings your job is still failing it's probably time to gather some more information.

Let's try approach two on a real example by following these steps:

- Find the sequence alignment from last week. Start off by cutting down to just the first four sequences in the file.

- Install RAxML version 8. You can get the source here, but it's also more easily available via bioconda.

- Run a phylogenetic analysis and check the CPU and memory use. The command is of the form

raxmlHPC-SSE3 -s BIGSdb_024538_1190028856_31182.dna.aln -n raxml_1sample -m GTRGAMMA -p 1. - Increase the number of samples and retime. I would suggest a log scale, perhaps doubling the samples included each time. Plot your results.

- Use your plot to estimate what is required for the full dataset.

If you have time, you can also try experimenting with different numbers of CPU threads (use the PTHREADS version and -T), and number of sites

in the alignment too.

You can use codon or your laptop to do this.

LSF is really good at giving you resource use. What about on your laptop? Using

/usr/bin/time -v (gtime -v on OS X, install with homebrew) gives you most of the same information.

What about approach three? (view after exercise)

For this particular example, the authors have calculated how much memory is required and even have an online calculator. Try it here and compare with your results.

Adding instrumentation to your code

We said in optimisation that some measurement is better than no measurement.

I am a big fan of progress bars. They give a little more information than just the total time and are really easy to add in. When you've got a function that just seems to hang, seeing the progress and expected completion time tells you whether your code is likely to finish if you just wait a bit longer, or if you need to improve it to have any chance of finishing. This also gives you some more data, rather than just increasing the wall time repeatedly.

In python, you can wrap your iterators in tqdm which gives you a progress bar

which number of iterations done/total, and expected time.

tqdm progress bars in python

GPUs

I have not used the GPUs on the codon cluster, but a few people requested that I cover them.

In the session I will take a moment if anyone has experience they would like to discuss.

The following are a list of things I think are relevant from my use of GPUs (but where I have admin rights and no queue):

- Use

nvidia-smito see available GPUs, current resource use, and driver version. - Using multiple GPUs is possible and easier if they are the same type.

- GPUs have a limited amount device memory, usually around 4Gb for a typical laptop card to 40Gb for a HPC card.

- The hierarchy of processes is: thread -> warp (32 threads) -> block (1-16 warps which share memory) -> kernel (all blocks needed to run the operation) -> graph (a set of kernels with dependencies).

- Various versions to be aware of: driver version (e.g. 410.79) -- admin managed; CUDA version (e.g. 11.3) -- can be installed centrally or locally; compute version (e.g. 8.0 or Ampere) -- a feature of the hardware. They need to match what the package was compiled for.

- When compiling, you will use

nvccto compile CUDA code and the usual C/C++ compiler for other code.nvccis used for a first linker step, the usual compiler for the usual final linker step. pytorchetc have recommended install routes, but if they don't work compiling yourself is always an option.- You can use

cuda-gdbto debug GPU code. Use is very similar togdb. Make sure to compile with the flags-g -G. - Be aware that cards have a maximum architecture version they support. This is the compute version given to the CUDA compiler as sm_XX. For A100 cards this is sm_80.

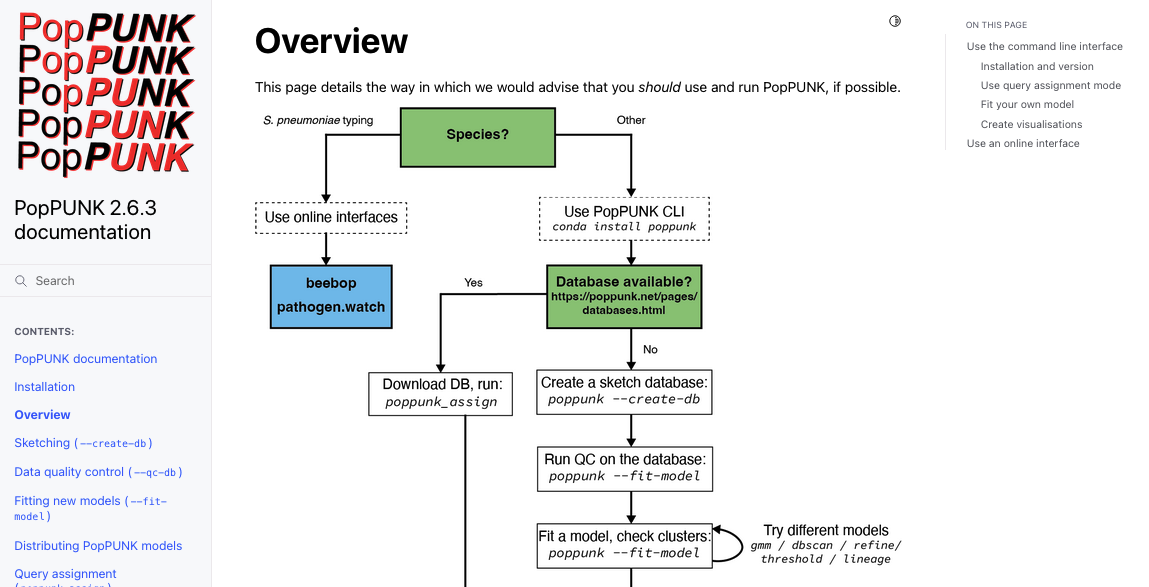

See here for some relevant installation documentation for one of our GPU packages: https://poppunk.readthedocs.io/en/latest/gpu.html

Using GPUs for development purposes? We do have some available outside of codon which are easier to play around with.

Interactive jobs

Just some brief notes for this point:

- Use

bsub -I, and without the-oand-eoptions to run your job interactively, i.e. so you are sent to the worker node terminal as the job runs. - Make sure you are using X11 forwarding to see GUIs. Connect with

ssh -X -Y <remote>, and you may also need Xquartz installed. - If you are using

tmuxyou'll need to make sure you've got the right display. Runecho $DISPLAYoutside of tmux, thenexport DISPLAY=localhost:32(with whatever$DISPLAYcontained) inside tmux. - If you want to view images on the cluster without transferring files, you can

use

display <file.png> &. For PDFs see here.

Ultimately, it's a pain, and using something like OpenStack or a VM in the cloud is likely to be a better solution.

Installing software without admin rights

If possible, install your software using a package manager:

homebrewon OS X.apton Ubuntu (requires admin).conda/mambaotherwise.

conda tips

-

Install miniconda for your system.

-

Add channels in this order:

conda config --add channels defaults

conda config --add channels bioconda

conda config --add channels conda-forge

- If you've got an M1 Mac, follow this guide to use Intel packages.

- Never install anything in your base environment.

- To create a new environment, use something like

conda create env -n poppunk python==3.9 poppunk. - Environments are used with

conda activate poppunkand exited withconda deactivate. - Create a new environment for every project or software.

- If it's getting slow at all, use

mambainstead! Use micromamba to get started. - Use

mambafor CI tasks. - You can explicitly define versions to get around many conflicts, in particular changing the python version is not something that will be allowed unless explicitly asked for.

- For help: https://conda-forge.org/docs/user/tipsandtricks.html.

Also note:

- anaconda: package repository and company.

- conda: tool to interact with anaconda repository.

- miniconda: minimal version of the anaconda repostory and conda.

- conda-forge: general infrastructure for creating open-source packages on anaconda.

- bioconda: biology-specific version of conda-forge.

- mamba: a different conda implementation, faster for resolving environments.

What about if your software doesn't have a conda recipe? Consider writing one! Take

a look at an example on bioconda-recipes and copy it to get started.

Exercise 2: Installing a bioinformatics package

Let's try and install bcftools.

To get the code, run:

git clone --recurse-submodules https://github.com/samtools/htslib.git

git clone https://github.com/samtools/bcftools.git

cd bcftools

The basic process for installing C/C++ packages is:

./configure

make

make install

You can also usually run make clean to remove files and start over, if something goes wrong.

Typically, following this process will try and install the software for all users in the system directory /usr/local/bin

which you won't have write access to unless you are root (admin). Usually the workaround is to

set PREFIX to a local directory. Make a directory in your home called software mkdir ~/software and

then run

./configure --prefix=$(realpath ~/software)

make && make install

This will set up some of your directories in ~/software for you. Other directories

you'll see are man/ for manual pages and lib/ for shared objects used by many

different packages (ending .so on Linux and .dylib on OS X).

If there's no --prefix option for the configure script, you can often override

the value in the Makefile by running:

PREFIX=$(realpath ~/software) make

The last resort is to edit the Makefile yourself. You can also change the optimisation

options in the Makefile by editing the CFLAGS (CXXFLAGS if it's a C++ package):

CFLAGS=-O3 -march=native make

Compiles with the top optimisation level and using instructions for your machine.

Try running bcftools -- not found. You'll need to add ~/software to your PATH

variable, which is the list of directories searched by bash/zsh when you type a command:

export PATH=$(realpath ~/software)${PATH:+:${PATH}}

To do this automatically every time you start a shell, add it to your ~/.bashrc or ~/.zshrc file.

Try running make test to test the installation.

To use the bcftools polysomy command requires the GSL library. Install that next. Ideally you'd just use your package manager, but let's do it

from source:

wget https://ftp.gnu.org/gnu/gsl/gsl-latest.tar.gz

tar xf gsl-latest.tar.gz

./configure --prefix=/Users/jlees/software

make -j 4

make install

Adding -j 4 will use four threads to compile, which is useful for larger projects.

Your software/ directory will now has an include/ directory where headers needed

for compiling/making with the library are stored, and a lib/ directory with

libraries needed for running software with the library are stored.

We need to give these to configure:

./configure --prefix=/Users/jlees/software --enable-libgsl CFLAGS=-I/Users/jlees/software/include LDFLAGS=-L/Users/jlees/software/lib

make

Finally, to run bcftools the gsl library also needs to be available at runtime (as opposed

to compile time, which we ensured by passing the paths above). You can

check which libraries are needed and if/where they are found with ldd bcftools (otool -L on OS X):

bcftools:

/usr/lib/libz.1.dylib (compatibility version 1.0.0, current version 1.2.11)

/usr/lib/libSystem.B.dylib (compatibility version 1.0.0, current version 1319.100.3)

/usr/lib/libbz2.1.0.dylib (compatibility version 1.0.0, current version 1.0.8)

/usr/lib/liblzma.5.dylib (compatibility version 6.0.0, current version 6.3.0)

/usr/lib/libcurl.4.dylib (compatibility version 7.0.0, current version 9.0.0)

/Users/jlees/software/lib/libgsl.27.dylib (compatibility version 28.0.0, current version 28.0.0)

/System/Library/Frameworks/Accelerate.framework/Versions/A/Frameworks/vecLib.framework/Versions/A/libBLAS.dylib (compatibility version 1.0.0, current version 1.0.0)

So libgsl.dylib and libBLAS.dylib are being found ok. If they aren't being found, you need

to add ~/software/lib to the LD_LIBARY_PATH environment variable like you did with the PATH.

Check that you can run bcftools polysomy.

Troubleshooting jobs and prototyping code

Some tips:

- It's ok to run small jobs on the head node, especially to check you've got the arguments right. Then kill them with

Ctrl-Corkillonce they get going. Avoid running things that use lots of memory (these will likely be killed automatically). You can preface your command withniceto lower its priority. - Find a smaller dataset which replicates your problems, but gets to them without lots of CPU and memory use. Then debug interactively rather than through the job submission system.

- Run a simple or example analysis first, before using your real data or the final set of complex options.

- Add print statements through your code to track where the problem is happening.

- If that doesn't find the issue, use a debugger interactively (see last session). For compiled code giving a segfault, this is usually the way to fix it (or with

valgrind). - If you need to debug on a GPU, be aware this will stop the graphics output.

- Try and checkpoint larger tasks. Save temporary or partial results (in python with a pickle, in R with

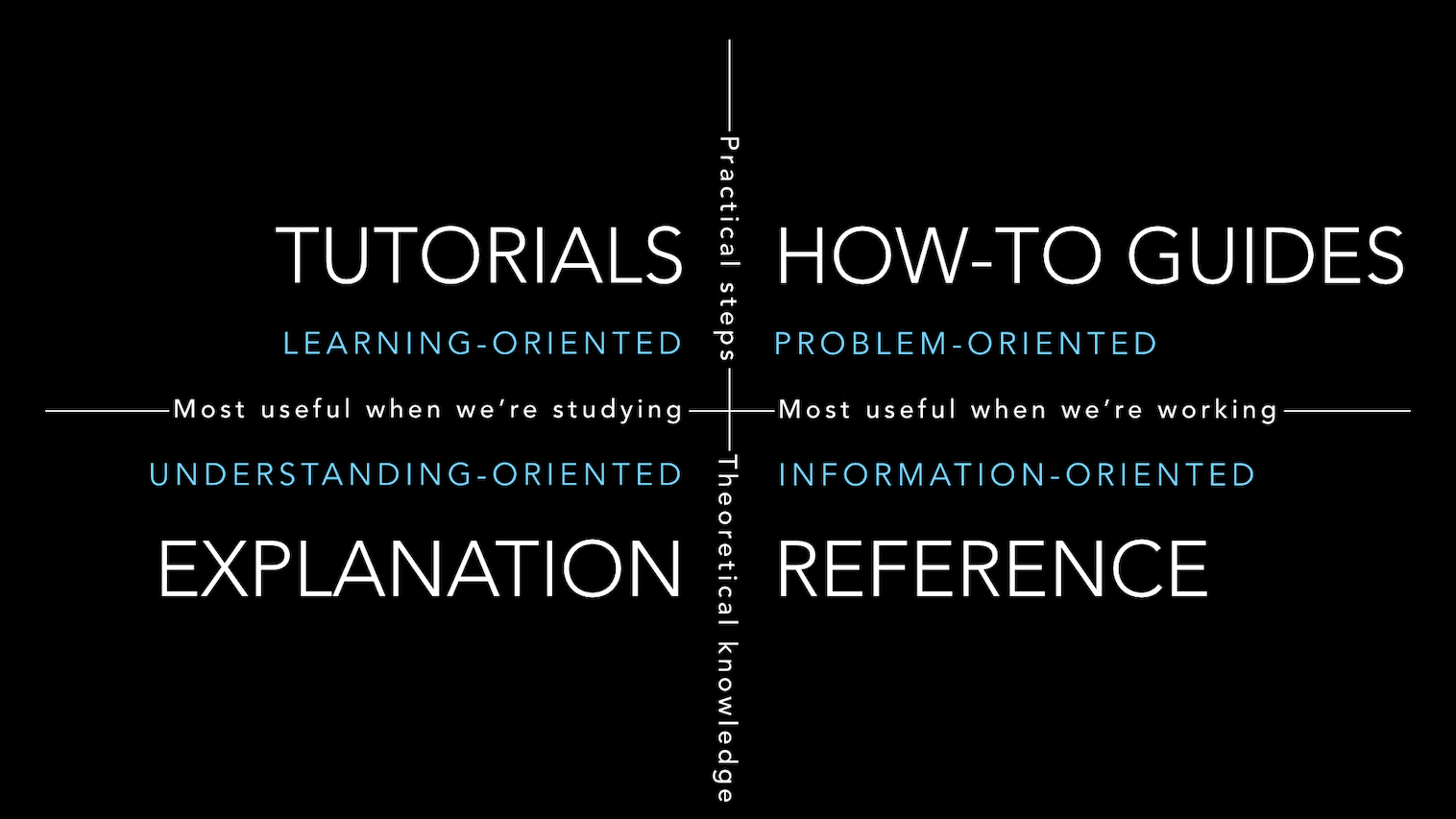

saveRDS). Pipeline managers will help with this and let you resume from where things went wrong. - Split your task into smaller jobs. For example, if you have code which parses genomic data into a data frame, runs a regression on this data frame, then plots the results. Split this into three scripts, each of which saves and loads the results from the previous step (using serialisation).

Job arrays

If you have embarrasingly parallel tasks, i.e. those with no shared memory or dependencies, job arrays can be an incredibly convenient and efficient way to manage batching these jobs. Running the same code on multiple input files is a very common example. In some settings, using a job array can give you higher overall priority.

To use an array, add to your bsub command:

bsub -J mapping[1-1000]%200 -o logs/mapping.%J.%I.o -e logs/mapping.%J.%I.e ./wrapper.py

-Jgives the job a name[1-1000]gives the index to run over. This can be more complex e.g.[1,2-20,43], useful for resubmitting failed jobs.%200gives the maximum to run at a time. Typically you don't need this because the scheduler will sort out priority for you, but if, for example, you are using a lot of temporary disk space for each job it may be useful to restrict the total running at any given moment.- We add

%Ito the output file names which is replaced with the index of the job so that every member of the array writes to its own log file.

The key thing with a job array is that an environment variable LSB_JOBINDEX will be

created for each job, which can then be used by your code to set up the process correctly.

Let's work through an example.

Exercise 3: Two-dimensional job array

Let's say I have the following python script which does a stochastic simulation of an infectious disease outbreak using an SIR model and returns the total number of infections at the end of the outbreak:

import numpy as np

def sim_outbreak(N, I, beta, gamma, time, timestep):

S = N - I

R = 0

max_incidence = 0

n_steps = round(time/timestep)

for t in range(n_steps):

infection_prob = 1 - np.exp(-beta * I / N * timestep)

recovery_prob = 1 - np.exp(-gamma * timestep)

number_infections = np.random.binomial(S, infection_prob, 1)

number_recoveries = np.random.binomial(I, recovery_prob, 1)

S += -number_infections

I += number_infections - number_recoveries

R += number_recoveries

return R

def main():

N = 1000

I = 5

time = 200

timestep = 0.1

repeats = 100

beta_start = 0.01

beta_end = 2

beta_steps = 100

gamma_start = 0.01

gamma_end = 2

gamma_steps = 100

for beta in np.linspace(beta_start, beta_end, num=beta_steps):

for gamma in np.linspace(gamma_start, gamma_end, num=gamma_steps):

sum_R = 0

for repeat in range(repeats):

sum_R += sim_outbreak(N, I, beta, gamma, time, timestep)

print(f"{beta} {gamma} {sum_R[0]/repeats}")

if __name__ == "__main__":

main()

The main loop runs a parameter sweep over 100 values of beta and gamma (so \(10^4\) total), and runs 100 repeats (so \(10^6\) total). Each of these parameter combinations takes around 3s to run on my machine, so we'd be expecting about 8 hours total. We could of course use some tricks from last week to improve these, but instead lets use a job array to parallelise.

Do the following:

- Change the

beta_stepsandgamma_stepsto 5 (so we don't all hammer the cluster too hard). - Use

import osandjob_index = os.environ['LSB_JOBINDEX']to get the job index at the start of the main function. - Replace the outer loop over beta with this index, so that the job runs a single beta value, but the full loop over gamma.

- Write an appropriate

bsubcommand to submit this is a job array. Use the-q shortqueue. - Think about how you would collect the results from all of your jobs (using either bash or by modifying the code).

If you have time:

- Also replace the gamma loop, so that each job in the array (of 25 jobs) runs just a single beta and gamma value, but the full repeat loop.

- Change the print statement so it's easier to collect your results at the end.

It may help to review the section on strides from the previous session when you are thinking about how to map a 1D index into two dimensions.

One other note: here we are using random number generators across multiple processes. If they have the same seed, which may be the default, they will produce identical results and likely be invalid repeats. Even if they are set to different seeds (either by using the job index or by using the system clock), after some time they will likely become correlated, which may also cause problems. See Figure 3 in this paper for how to fix this.

Reproducibility on the HPC

Tips based on what has worked for me:

- Keeping track of your command history goes a long way. Set up your history options with unlimited scrollback and timestamps.

- Search through history in the following order:

- Type the start of your command, then press up.

- Use Ctrl-r to search for part of your command.

- Use

history | grepfor more complex searches. e.g.history | grep sir.py | grep bsubwould find when thesir.pyscript was submitted with bsub. You can use-Cto add context and see other commands. Or usehistory | lessand the search function to find the sequence of commands you used.

- Be careful with editing files in a text editor on the cluster -- this command cannot just be rerun and it won't be clear what you did.

- Use git for everything you write, and commit often.

- For larger sets of jobs, particularly which you will run repeatedly or expect some failures, it may be worth using pipeline software (snakemake, nextflow) to connect everything together. Ususally they can handle the

bsub/SLURM submission for you. - Keep your code and data separate.

- Don't modify your input data, instead make intermediate files.

- Don't overwrite files unless you have to. Regularly clean and tidy files you are working on.

- Call your files and directories sensible names and write a

READMEif they get complicated. - Think about your main user: future you. Will you be able to come back to this in a month's time? Three year's time?

- Use

-oand-ewith all jobs, and write tologs/folders. You can easilytarthese once you are done. - Make plots on your local machine (with code that can be run through without error or needing the current environment). Transfer smaller results files from the HPC to make your plots.

- Use conda environments to make your software versions consistent between runs. Use

conda listto get your software and versions when you write papers.

Compiled languages

Before starting this session please review the prerequisites. You should at least have the rust toolchain installed.

In this module you will be introduced to a compiled language called rust. There are three parts:

a. Introduction (this session). A description of basics in rust. No main exercise.

b. Data structures. A description of some more complex types in rust. Exercise: k-mer counter.

c. Object-oriented programming. Using objects (structs) to write your code. Exercise: Gaussian blur.